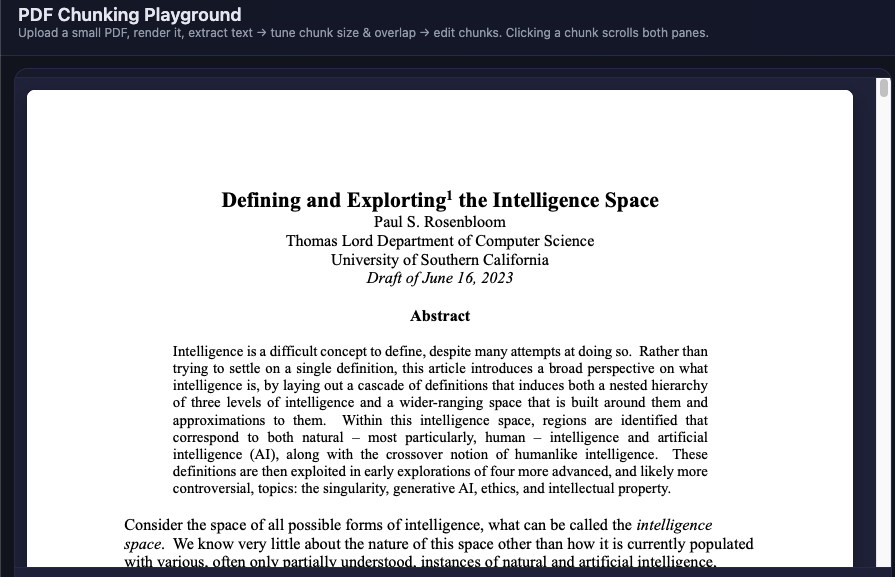

Document Ingestion

Let’s demystify “giving documents to an LLM.” We’ll walk through the exact steps our playground app performs (render → extract → chunk → embed → retrieve), then show clear upgrade paths for accuracy, speed, and stability. This is entirely in the browser to give the curious developer a kicking off point to understand the fundamentals of document ingestion for LLM context engineering.

Nagivate to the full playground here

Upload a document

I start by uploading a file. In this explainer, the app ingests PDFs.

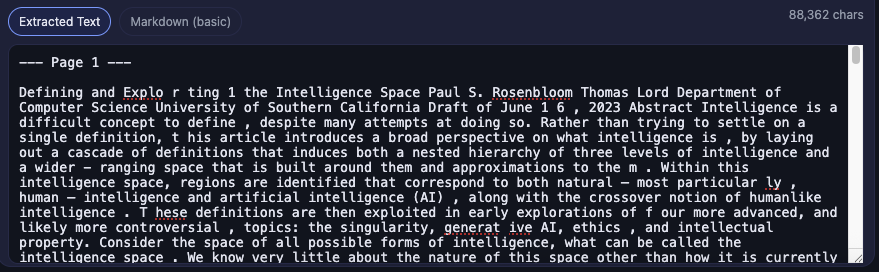

Extract what’s inside

I pull out the elements (primarily text) and preserve useful structure:

- Pages (for citations)

- Paragraphs

- Headings / sections (for meaning and navigation)

If you need high‑fidelity parsing (scanned PDFs, tables, multi‑column layouts), swap in a stronger extractor later with no change to the rest of the flow.

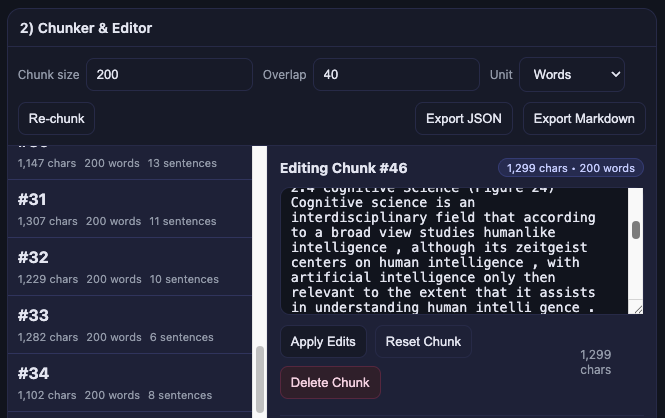

Split into chunks

I break the extracted text into smaller pieces so we can retrieve only what’s relevant.

Heuristics that work well for prose

- Size: around 400 (think ≈400 tokens; roughly 800–1,600 characters)

- Overlap: about 15% to keep thoughts intact across boundaries

- The more semantic the split (ends of sentences/paragraphs/sections), the better the downstream results

In the playground You can chunk by characters, words, or sentences. Start simple, then move toward semantic chunking as needed.

Review the chunks

Skim to make sure the chunks and overlaps make logical sense (no mid‑sentence cuts, tables split in half, etc.). Clicking a chunk scrolls both the extracted text and the PDF page to the right spot.

Turn chunks into embeddings

- An embedding is a numeric fingerprint of meaning. Similar text → similar vectors.

- In the playground: I use a lightweight browser model so everything runs locally.

- In production: call an embeddings API that fits your data (e.g., legal, medical, support). Store the vector and metadata (doc, page, section) for citations later.

Search

Search by meaning (vector search)

- I embed the user’s query and find the Top‑K most similar chunks.

- Top K (vec): how many results to keep (also helps manage token usage when sending to the LLM)

- Min vec: minimum similarity score to filter weak, off‑topic matches

Search by words (keyword / phrase)

- Semantic search can miss exact strings (product codes, IDs, proper nouns). To cover that, I also build a reverse index for keyword search:

- Exact phrase: literal substring match (case‑insensitive)

- BM25: classic keyword ranking when you don’t want strict exactness

Hybrid search (best of both)

I combine both result sets using RRF (Reciprocal Rank Fusion) so that a highly relevant item from either vector or keyword search rises to the top. This hybrid list is what we feed into the LLM as extra context.

Send to the LLM (with receipts)

I provide the top chunks, along with their page/section references. Manually Prompt the LLM to quote and cite. If similarity scores are too low, instruct it to respond with “not enough context” instead of guessing.

Why this works

- Clean extraction → better input

- Sensible chunking → better recall and less waste

- Good embeddings → better semantic matches

- Hybrid search → covers both meaning and exact terms

- Citations → trustworthy answers

Nice upgrades when you’re ready

- Better extractors (up to your internal pipeline like “Dolphin”) for layout, tables, and scanned pages

- Semantic chunking with an LLM (split by meaning, not just size)

- Domain‑tuned embeddings via API

- Hierarchical summaries (section/document‑level nodes) for fast, stable retrieval on long docs

- One‑stop frameworks (e.g., LlamaIndex) to assemble these strategies with less plumbing

The End-to-End Picture

Pipeline: Ingest → Extract → Normalize → Chunk → Embed → Index → Retrieve → Assemble Context → Generate → Evaluate/Monitor.

This PDF playground implements a minimal but working slice of this pipeline:

- Render PDFs with PDF.js and extract text

- Chunk by characters/words/sentences with overlap

- Embed chunks with a local MiniLM model

- Search via cosine similarity and preview result chunks

- Jump from any chunk to its position in the PDF (traceability)

Principle: start with something observable and end‑to‑end; then layer sophistication under measurement.

Quick Roadmap (Baseline → Better → Best)

| Stage | Baseline (In this playground) | Better | Best |

|---|---|---|---|

| Extract | PDF.js text | Unstructured / GROBID / OCR | Layout‑aware + custom heuristics (e.g., “Dolphin”) |

| Clean | whitespace + page tags | de‑hyphen, boilerplate removal | doc‑type normalizers per template |

| Chunk | fixed windows | semantic + structure‑aware | parent‑child + hierarchical summaries |

| Embed | MiniLM‑L6‑v2 | bge‑base / e5‑large / gte‑base | domain or premium (text‑embedding‑3‑large) |

| Index | in‑mem | FAISS / pgvector | hybrid (BM25⊕vec) + HNSW/PQ + filters |

| Retrieve | top‑K cosine | MMR, query expansion | reranker (cross‑encoder), dynamic K |

| Context | concat | window stitch + cite | hierarchical assembly with parent summaries |

| Generate | plain prompt | scaffold + cite | verification + tool‑augmented answers |

| Evaluate | manual | eval sets + dashboards | canaries + regression gates |

Appendix A — How this ties to our PDF Playground

- Upload & Render: PDF.js draws canvases per page; we tag each with page ids for scrolling/highlighting.

- Extract: pull text items, normalize whitespace, insert page markers.

- Chunk: configurable unit (chars/words/sentences), size, overlap; each chunk stores source offsets.

- Jump: click a chunk to select its span in the text and scroll to the PDF page.

- Embed & Search: MiniLM embeddings in‑browser; cosine top‑K with min‑score filter and result list.

- Export: save chunks to JSON/Markdown for downstream use.

Next features we can add to the app to match the whitepaper:

- OCR for scanned PDFs; table extraction; figure captioning.

- Semantic/LLM chunker; parent‑child windows; summary node creation.

- Hybrid search (BM25 + vector) and reranking.

- Citation renderer that highlights exact PDF spans and section headers.

- Built‑in mini evaluation suite (seeded Q/A pairs, hit‑rate, nDCG, and faithfulness checks).

Appendix B — Practical Defaults & Checklists

Chunking defaults

- Size: 600–1,000 tokens; Overlap: 10–20%; Break on headings/bullets; Keep paragraphs intact.

Retrieval defaults

- K: 4–8; Min cosine: 0.3–0.4; MMR λ: 0.3; Rerank top 20–50 when needed.

Embedding ops

- L2‑normalize; track model name+version; re‑embed on model change; snapshot indices.

Prompting

- Require citations with page numbers; allow “I don’t know” on low‑score queries.

Security

- PII redaction where required; per‑tenant encryption keys; audit logs on retrieval events.