A Field Guide for Technical Consultants

Building trust quickly and consistently is the cornerstone of successful consulting. This guide outlines practical wisdom and repeatable strategies drawn from seasoned Dialexa Engineering Leads to help consultants earn client trust, produce impact rapidly, and drive long-term success in complex delivery environments.

I. Core Principles of Trusted Advisorship

| Principle | Description |
|---|---|
| Be a Chef, Not a Cook | Customize solutions, don’t follow recipes. Show mastery by adapting to the client’s environment. |
| Spot Missed Opportunities | Build trust by finding overlooked wins; show that you deeply understand the client’s business. |
| Win When They Win | Understand internal politics and pressures. Help your stakeholder succeed, and you will too. |
| Deliver Great Work, Fast | Execution builds credibility. Early momentum is the best sales strategy. |
| Earn Respect Daily | No entitlement: prove value with every interaction. |
| Collaborate, Don’t Confront | Never posture as an adversary. If tension arises, escalate early. |
| Involve the Client | Co-create, don’t prescribe. Solutions that don’t reflect the client’s input will fail. |
| Build Your Own Advisory Web | Surround yourself with teammates you can lean on, delegate to, and learn from. |

II. The Trusted Advisorship Process

Ramp Up

Research the domain and technical context early to build confidence and insight.

Trusted Introduction

Leverage existing trusted relationships for a warm intro when possible.

Understand Problems, Not Just Solutions

- Focus on business pain and context—not prepackaged answers

- Show empathy and listen actively

- Avoid being “another consultant with a stack”

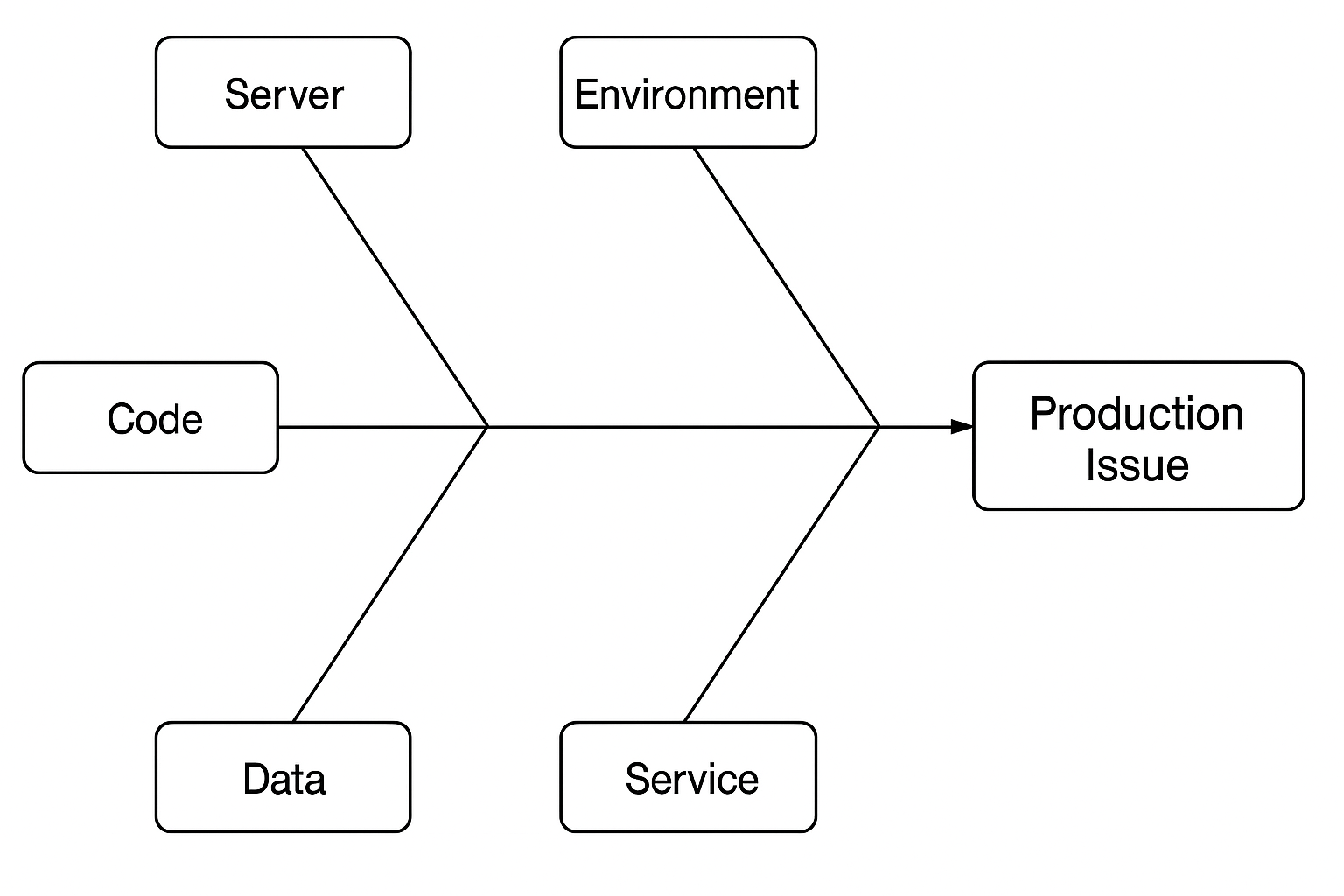

Assess & Hypothesize

- Dive deeper into root causes

- Define knowns and variables

- Involve stakeholders in strategy design

- Frame hypotheses from observed system patterns

Align on a Shared Goal

- Identify a small, high-impact scope for delivery

- Build momentum through working software

- Prove value to earn permission for larger problems

Create a Living Roadmap

- Offer transparency in timelines and tradeoffs

- Keep things flexible while reducing client anxiety

- Reinforce capability through clarity

Execute & Iterate

- Deliver early and often

- Build trust through results

- Show your work and adjust based on feedback

III. Stakeholder Personas & Strategies

| Persona | Traits | Strategy |
|---|---|---|
| Headstrong | Likes control, confident in their view | Be assertive, offer expertise, collaborate with strength |
| Consultant Trauma | Burned in the past, emotional responses | Instill confidence, over-communicate, deliver quickly |
| Technical | Solution-forward, detail-heavy | Reframe back to the problem, show data, Trojan Horse technique |
| Politician | Navigating internal pressures | Understand politics, craft low-cost wins for their career |
| Visionary | Grand ideas, budget-agnostic | Protect scope, speak to vision in strategic terms |
| Enterprise | Experienced, skeptical | Speak in their language, earn trust through quality and rigor |

IV. Project Environments

✅ Straightforward Projects

- Clear requirements, contained scope

- Execute efficiently and communicate proactively

🌀 Incomprehensible Projects

- Large, ambiguous, legacy, or political

- Focus on:
  - Isolating knowns vs. unknowns
  - Parallelizing execution with discovery
  - Establishing and testing strong hypotheses
  - Delivering small wins to gain ground

V. Communication Framework

Leverage these other resources to better navigate stakeholder relationships:

Communication Styles

Learn to tailor your messaging to resonate with your audience.

Types of Decision Makers

Understand the decision-making styles of stakeholders to influence them effectively.

Closing Thought

Trusted advisorship isn’t earned through credentials—it’s earned through empathy, insight, and execution. Be humble, be sharp, and be indispensable.